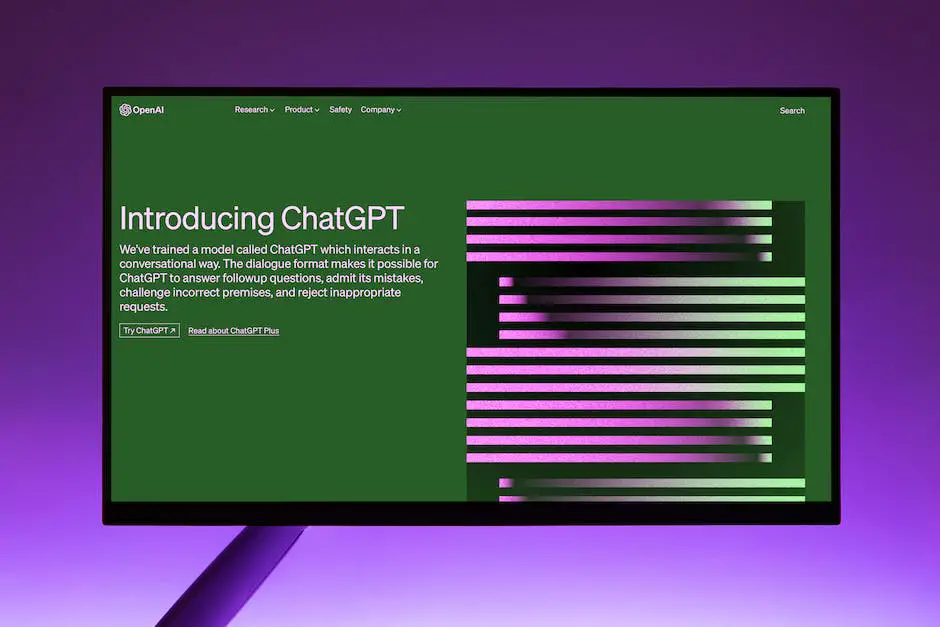

With the rapid advancement of AI and machine learning technologies, the ability to use these tools in creative applications has become an essential skill. One of the most visible of these advancements is OpenAI’s GPT family of language models, and more specifically ChatGPT, a conversational model fine-tuned from the GPT-3.5 series. Improving on earlier GPT models, ChatGPT’s ability to understand and generate human-like text makes it a valuable tool for tasks such as prompt generation, conversation, language translation, and much more. This guide explains the technology behind ChatGPT, explores the ins and outs of the OpenAI API, walks through hands-on coding with ChatGPT, and covers best practices for testing and improving your chatbot’s performance. By the end, you’ll be able to interact with ChatGPT confidently and put its capabilities to practical use.

Understanding ChatGPT

Understanding OpenAI’s GPT-3 Model

OpenAI’s GPT-3 (Generative Pre-trained Transformer 3) is a large autoregressive language model that uses deep learning to generate human-like text. It produces text one token (a word or piece of a word) at a time, predicting the likelihood of each possible next token given the tokens that came before it. It can perform tasks such as translation, question answering, and summarization, and it can even write code in different programming languages.
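GPT-3 computes these next-token probabilities with a very large neural network. As a toy illustration of the same predict-then-append loop, the sketch below swaps the neural network for simple bigram word counts. This is not how GPT-3 estimates probabilities, and the function names are hypothetical. It also uses greedy decoding (always taking the most likely word), whereas deployed models usually sample from the distribution.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text: str) -> dict[str, Counter]:
    """Count how often each word follows each other word (a toy stand-in for a language model)."""
    words = text.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probabilities(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Estimate P(next word | previous word) from the bigram counts."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()} if total else {}


def generate(counts: dict[str, Counter], start: str, length: int) -> list[str]:
    """Autoregressive generation: repeatedly append the most likely next word."""
    output = [start]
    for _ in range(length):
        probs = next_word_probabilities(counts, output[-1])
        if not probs:
            break  # no known continuation for this word
        output.append(max(probs, key=probs.get))
    return output


corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_counts(corpus)
print(next_word_probabilities(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(" ".join(generate(model, "the", 4)))    # the cat sat on the
```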

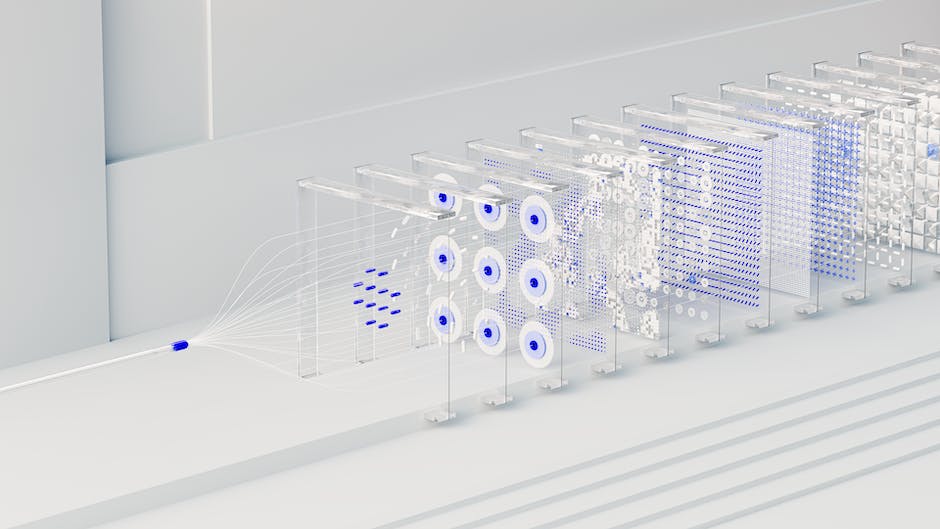

Technology Behind GPT-3

GPT-3 is built with deep learning, a branch of machine learning that trains artificial neural networks with many layers to recognize patterns in large amounts of data. Neural networks are loosely inspired by how neurons in the brain connect. GPT-3 uses a type of neural network called a transformer, which processes all positions of the input sequence in parallel and learns the relationships between them.

Capabilities of GPT-3

The GPT-3 model has remarkably diverse capabilities. It can understand context, generate coherent sentences, mimic writing styles, translate languages, answer trivia, summarize long documents, create conversational agents, tutor in a variety of subjects, draft emails or other pieces of writing, write Python code, create Python-powered games, and even craft poems.

Functionality of GPT-3

To use GPT-3, you give it a sequence of text, called a “prompt”, and it generates text that continues the prompt. Because it can keep a consistent tone, style, and context, its output can read as though a human wrote it.

Understanding Transformer Models

A key component of GPT-3 is the transformer architecture. Transformers revolutionized natural language processing by building neural networks around the concept of attention. Earlier NLP models used recurrent layers that read a sequence one word at a time. A transformer instead looks at the whole input sequence at once. At its core, it relies on self-attention, which lets the model weigh how important every other word in the sequence is when building the representation of each word.
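The core computation is scaled dot-product attention, softmax(QKᵀ / √d)·V. The pure-Python sketch below implements it for a handful of small vectors, with an optional causal mask so that each position attends only to itself and earlier positions, as in GPT-style models. The sketch simplifies in two ways. A real transformer derives queries, keys, and values from learned projection matrices and runs many attention heads, whereas here the same input vectors are reused for Q, K, and V.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(
    queries: list[list[float]],
    keys: list[list[float]],
    values: list[list[float]],
    causal: bool = True,
) -> list[list[float]]:
    """Scaled dot-product attention, computed one query position at a time.

    With causal=True, position i may only attend to positions 0..i.
    """
    d = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        visible = list(range(i + 1)) if causal else list(range(len(keys)))
        # Similarity of this query with each visible key, scaled by sqrt(d)
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d) for j in visible]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible value vectors
        out = [
            sum(w * values[j][k] for w, j in zip(weights, visible))
            for k in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Three toy "token" vectors, used as queries, keys and values alike
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = self_attention(x, x, x)
for row in out:
    print([round(v, 3) for v in row])
```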

Natural Language Processing Fundamentals

Natural Language Processing (NLP) is a branch of AI that deals with the interaction between computers and humans through natural language. Its goal is to let computers read, interpret, and generate human language in useful ways. Chatbots like ChatGPT use NLP and machine learning to understand, process, and respond to human language in a natural, human-like way.

Working with ChatGPT

To code with ChatGPT, first work out the prompt you need to provide. The trick is to make the prompt as explicit and detailed as possible to guide the model toward the desired output. You can experiment in the OpenAI Playground or in any other tool that supports the OpenAI API. With careful instructions and the right setup, ChatGPT can be a powerful tool for generating natural-language responses that feel intuitive and human-like.

Exploring the OpenAI API

Understanding the OpenAI API

The OpenAI API is an interface that lets you interact with models like the ones behind ChatGPT. It acts as the bridge between your application and OpenAI’s models. You can use the API to send prompts to the models, set ‘max_tokens’ to cap the length of the response, and choose a ‘temperature’ to control how random the model’s answers are.

Sending Requests to the OpenAI API

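To send a request to the OpenAI API, you typically make an HTTP POST request. The request consists of the API endpoint URL, headers for authentication and content type, and a JSON payload containing the input message, or ‘prompt’, for the model. Older tutorials often use the https://api.openai.com/v1/engines/davinci-codex/completions endpoint. However, the ‘engines’ endpoints are deprecated and the Codex models have been retired. Plain text completion now uses https://api.openai.com/v1/completions, with the model named in the request body. OpenAI labels this endpoint as legacy and recommends the chat completions endpoint for most new work. For example:

```http
POST /v1/completions HTTP/1.1
Host: api.openai.com
Authorization: Bearer YOUR_API_KEY
Content-Type: application/json

{
  "model": "gpt-3.5-turbo-instruct",
  "prompt": "Translate the following English text to French: '{}'",
  "max_tokens": 60
}
```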

This HTTP POST example to the OpenAI API sends a request to translate English text to French. Note that the placeholder for the text to be translated is ‘{}’. You should replace it with the text you want to be translated. You must also replace ‘YOUR_API_KEY’ with your actual OpenAI API key for the request to succeed.
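The same request can be assembled in Python with only the standard library. This is a minimal sketch that assumes the ‘/v1/completions’ endpoint and the ‘gpt-3.5-turbo-instruct’ model name. The helper ‘build_completion_request’ is hypothetical, and an f-string fills the ‘{}’ placeholder. The network call only runs when the file is executed directly and an OPENAI_API_KEY environment variable is set.

```python
import json
import os
import urllib.request

# Assumption: the non-engine completions endpoint; the model goes in the JSON body
API_URL = "https://api.openai.com/v1/completions"


def build_completion_request(text: str, api_key: str, max_tokens: int = 60) -> urllib.request.Request:
    """Build (but do not send) a POST request asking the model to translate `text` to French."""
    payload = {
        "model": "gpt-3.5-turbo-instruct",  # assumed model name; use one available to your account
        "prompt": f"Translate the following English text to French: '{text}'",
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    req = build_completion_request("Hello, how are you?", os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(req) as resp:  # performs the actual network call
        print(json.loads(resp.read())["choices"][0]["text"].strip())
```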

Formatting Inputs and Outputs

When sending inputs and receiving outputs from the OpenAI API, the data is structured in JSON format. Your input message, or ‘prompt’, goes in the ‘prompt’ field, and additional parameters like ‘max_tokens’ (maximum response length) and ‘temperature’ (randomness of AI response) go within the same JSON payload.

The API’s response is also JSON and includes the ‘id’, ‘object’, ‘created’, ‘model’, ‘choices’, and ‘usage’ fields. The model’s output, or completion, is in the ‘choices’ array. For the completions endpoint, the generated text of the first choice is in ‘choices[0].text’.
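The following is a minimal sketch of reading the completion out of a response. It assumes the completions-endpoint shape, in which each choice carries a ‘text’ field. The sample response is invented for illustration, including its token counts, and ‘first_completion’ is a hypothetical helper.

```python
import json

# Illustrative response in the completions shape; all values are invented for this example
sample_response = json.loads("""
{
  "id": "cmpl-example",
  "object": "text_completion",
  "created": 1700000000,
  "model": "gpt-3.5-turbo-instruct",
  "choices": [
    {"text": " Bonjour, comment allez-vous ?", "index": 0, "logprobs": null, "finish_reason": "stop"}
  ],
  "usage": {"prompt_tokens": 14, "completion_tokens": 8, "total_tokens": 22}
}
""")


def first_completion(response: dict) -> str:
    """Return the text of the first choice, stripped of surrounding whitespace."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["text"].strip()


print(first_completion(sample_response))               # Bonjour, comment allez-vous ?
print(sample_response["choices"][0]["finish_reason"])  # stop
```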

Adjusting Parameters for Different Communication Styles

You can adjust the ‘temperature’ and ‘max_tokens’ parameters to change the model’s communication style. The API accepts ‘temperature’ values from 0 to 2. Higher values (for example, 0.8 or above) make the responses more random and varied, while lower values (closer to 0) make them more focused and deterministic.

Set ‘max_tokens’ to cap the length of the response. The limit is measured in tokens, not words or characters. If the model reaches it, the output is cut off, and the API reports a ‘finish_reason’ of ‘length’.

Remember that using these parameters effectively takes experimentation: try several values and compare the results for your use case. Well-chosen settings make interactions with the model feel more natural and better suited to your users.
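Temperature is commonly implemented by dividing the model’s raw scores (logits) by T before applying softmax. OpenAI does not publish its sampling code, so treat this sketch as an illustration of the standard technique rather than of the API’s internals. The logits are made-up numbers. As T shrinks toward 0, the probability mass concentrates on the top token, and as T grows, the distribution flattens.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities, sharpened (T < 1) or flattened (T > 1)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate next tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```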

Coding with ChatGPT

Understanding ChatGPT

ChatGPT, developed by OpenAI, is a conversational AI model that uses machine learning to generate human-like text in response to prompts. It can be used in many applications, including task completion, data generation, conversational assistants, and more.

Getting Started with ChatGPT

Before you start coding with ChatGPT, make sure you are comfortable with Python. The OpenAI API itself is a language-agnostic HTTP API, but the examples in this guide use OpenAI’s official Python library. To call the API, you need an API key, which you can create in your OpenAI account after signing up on their website.

Setting up the Environment

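To begin, install the OpenAI Python client with ‘pip install openai’. The examples in this section use the library’s pre-1.0 interface (‘openai.ChatCompletion.create’), which was removed in version 1.0. To run them as written, install a 0.x release with ‘pip install "openai<1.0"’. In version 1.0 and later, the equivalent call is ‘client.chat.completions.create(...)’ on a client created with ‘from openai import OpenAI’ and ‘client = OpenAI()’.

Create a new Python file, import the library, and set your API key. Rather than hard-coding the key in your source, store it in an environment variable and read it with the os module:

```python
import os

import openai

# Read the key from the OPENAI_API_KEY environment variable instead of hard-coding it
openai.api_key = os.getenv("OPENAI_API_KEY")
```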

Executing a Basic Chat in Python

To run a basic chat with ChatGPT, call the ‘openai.ChatCompletion.create()’ function with a ‘model’ argument and a ‘messages’ argument. ‘messages’ is a list of dictionaries that holds the conversation history, each with a ‘role’ and ‘content’. The ‘system’ role sets the assistant’s behavior, the ‘user’ role is for the user’s inputs, and the ‘assistant’ role is for the model’s replies.

```python
response = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Who won the world series in 2020?"},
    ],
)
```

Building a More Complex Use Case

A more complex scenario might use more varied prompts and responses. For example, you can use the system role to steer the conversation and the user role to ask the model for information or to perform an action, such as a translation.

```python
response = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Translate this English text to French: 'Hello, how are you?'"},
    ],
)
```

Handling Long Conversations

For longer conversations, you need to manage tokens, because each model has a maximum context length. The messages you send and the reply the model generates both count toward this limit, and API usage is billed per token. In English, a token can be as short as one character or as long as one word, such as “a” or “apple”. As a rough rule, one token is about four characters of English text. Design your conversation history and future prompts so that they stay within the model’s token limit, for example by dropping or summarizing the oldest messages.
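The following minimal sketch keeps a conversation under a token budget by dropping the oldest turns while preserving the system message. It uses the rough four-characters-per-token rule rather than a real tokenizer; OpenAI’s tiktoken library gives exact counts. It also ignores the few tokens of formatting overhead each message adds. The helper names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate: about 4 characters per token for English (an approximation)."""
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Drop the oldest non-system messages until the estimated total fits the token budget.

    A leading system message is always kept so the assistant's instructions survive.
    """
    system = messages[:1] if messages and messages[0]["role"] == "system" else []
    rest = messages[len(system):]

    def total(msgs: list[dict]) -> int:
        return sum(estimate_tokens(m["content"]) for m in msgs)

    while rest and total(system + rest) > budget:
        rest = rest[1:]  # remove the oldest user/assistant message
    return system + rest


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Tell me about transformers. " * 20},
    {"role": "assistant", "content": "Transformers are neural networks built on attention. " * 20},
    {"role": "user", "content": "Summarize that in one sentence."},
]
print([m["role"] for m in trim_history(history, budget=300)])
```

Set the budget below the model’s context limit, leaving room for the reply you allow with ‘max_tokens’. When earlier context matters, summarizing old turns is a common alternative to dropping them.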

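Reading the Model’s Response

The model’s reply can be extracted from the API’s result with the following code:

```python
# The pre-1.0 library returns a dict-like object, so the reply can be read by key
reply = response['choices'][0]['message']['content']
print(reply)
```

This prints the assistant’s reply. With the library’s 1.0+ interface, the same value is available as ‘response.choices[0].message.content’.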
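The chat endpoint does not remember earlier calls. To continue a conversation, you append the assistant’s reply and the user’s next message to ‘messages’ and send the whole list again. The sketch below shows that bookkeeping using a hand-written response in the chat-completion shape; ‘add_reply_to_history’ is a hypothetical helper.

```python
def add_reply_to_history(messages: list[dict], response: dict) -> str:
    """Append the assistant's reply from a chat-completion response to the history and return it."""
    message = response["choices"][0]["message"]
    messages.append({"role": message["role"], "content": message["content"]})
    return message["content"]


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Who won the world series in 2020?"},
]
# Hand-written stand-in for an API response, using the chat-completion shape
fake_response = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "The Los Angeles Dodgers won the 2020 World Series."},
            "finish_reason": "stop",
        }
    ]
}

reply = add_reply_to_history(history, fake_response)
history.append({"role": "user", "content": "Where was it played?"})
# `history` can now be passed as `messages` in the next ChatCompletion.create call
print(reply)
```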

Remember:

Coding with ChatGPT involves learning how to structure chat interactions, handle responses, and work with the API. The key is to experiment with different tasks and adjust request parameters such as ‘temperature’ and ‘max_tokens’ until you get the results you want.

Testing and Improving Chatbot Performance

1. Understanding Chatbot Performance Metrics

The first step in improving a chatbot’s performance is understanding the metrics used to measure its effectiveness. Accuracy, for instance, is the percentage of user requests that the chatbot handles correctly. The F1 score is another key metric. It is the harmonic mean of precision (the proportion of true positives among all predicted positives) and recall (the proportion of true positives among all actual positives). A confusion matrix is a table that visualizes the performance of a classifier, typically a supervised learning model for which the correct labels are known. It shows how many predictions were correct and which classes were mistaken for one another.
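These metrics can be computed from a labeled test set of user requests. The sketch below assumes the chatbot’s job is to classify each request into an intent. The labels and the helper name are hypothetical. F1 is computed as 2PR / (P + R), where P is precision and R is recall.

```python
def classification_metrics(actual: list[str], predicted: list[str], positive: str) -> dict[str, float]:
    """Accuracy over all labels, plus precision, recall and F1 for one 'positive' label."""
    pairs = list(zip(actual, predicted))
    if not pairs:
        raise ValueError("need at least one labeled example")
    accuracy = sum(a == p for a, p in pairs) / len(pairs)
    tp = sum(a == positive and p == positive for a, p in pairs)  # correctly flagged
    fp = sum(a != positive and p == positive for a, p in pairs)  # wrongly flagged
    fn = sum(a == positive and p != positive for a, p in pairs)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


actual = ["refund", "refund", "greeting", "refund", "greeting", "order_status"]
predicted = ["refund", "greeting", "greeting", "refund", "refund", "order_status"]
for name, value in classification_metrics(actual, predicted, positive="refund").items():
    print(f"{name}: {value:.3f}")
```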

2. Frequent Testing and Refining

To keep your chatbot in top shape, test it regularly. Tests range from manual tests, where you chat with the bot as a user would, to automated tests, which are scripts or programs that simulate user interactions to check the bot’s behavior and responses. Automated testing can include unit tests of individual components, load tests that check behavior under heavy traffic, and regression tests. Regression tests ensure that improvements or changes do not break existing functionality.
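Below is a minimal regression-test sketch in which each case pairs a test utterance with a phrase the reply must contain. Checking for a key phrase rather than an exact string tolerates the wording variation of model-generated replies. ‘toy_bot’ is a hypothetical rule-based stand-in so the example runs offline. In practice, you would pass a function that calls your chatbot.

```python
from typing import Callable


def run_regression_suite(bot: Callable[[str], str], cases: list[tuple[str, str]]) -> list[str]:
    """Send each test utterance to the bot and report cases whose reply lacks the expected phrase."""
    failures = []
    for utterance, expected_phrase in cases:
        reply = bot(utterance)
        if expected_phrase.lower() not in reply.lower():
            failures.append(f"{utterance!r}: expected {expected_phrase!r}, got {reply!r}")
    return failures


def toy_bot(message: str) -> str:
    """Hypothetical rule-based stand-in for a real chatbot."""
    text = message.lower()
    if "refund" in text:
        return "I can help you request a refund."
    if "hours" in text:
        return "We are open 9am to 5pm."
    return "Sorry, I didn't understand that."


REGRESSION_CASES = [
    ("I want a refund", "refund"),
    ("What are your opening hours?", "9am"),
]

failures = run_regression_suite(toy_bot, REGRESSION_CASES)
print("all passed" if not failures else "\n".join(failures))
```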

3. Utilizing a Confusion Matrix

Building a confusion matrix will help you understand your chatbot’s strengths and weaknesses. Each row in the matrix represents the instances of an actual class, and each column represents the instances of a predicted class. Understanding the elements of the matrix (true positives, false positives, true negatives, and false negatives) can help pinpoint where the bot is struggling and how to improve its interactions.
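A confusion matrix for intent classification can be built from pairs of actual and predicted labels. The intent labels in this sketch are hypothetical. Correct predictions land on the diagonal. An off-diagonal cell, such as the one at the ‘refund’ row and ‘greeting’ column, counts refund requests the bot mistook for greetings.

```python
from collections import Counter


def confusion_matrix(actual: list[str], predicted: list[str]) -> tuple[list[str], list[list[int]]]:
    """Rows are actual classes, columns are predicted classes, cells are counts."""
    labels = sorted(set(actual) | set(predicted))
    counts = Counter(zip(actual, predicted))
    matrix = [[counts[(a, p)] for p in labels] for a in labels]
    return labels, matrix


actual = ["refund", "refund", "greeting", "refund", "greeting", "order_status"]
predicted = ["refund", "greeting", "greeting", "refund", "refund", "order_status"]
labels, matrix = confusion_matrix(actual, predicted)

# Print with actual classes down the side and predicted classes across the top
print(f"{'':>14} " + " ".join(f"{lbl:>14}" for lbl in labels))
for label, row in zip(labels, matrix):
    print(f"{label:>14} " + " ".join(f"{n:>14}" for n in row))
```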

4. Implementing a Feedback Loop

Feedback loops are also crucial for improving your chatbot’s performance. Have a strategy in place for collecting and analyzing feedback from users. This could be as simple as asking users to rate each interaction, or as complex as analyzing user messages and responses for sentiment and common problems. Then act on that feedback over time. For instance, you can use poorly rated conversations to revise prompts, or add corrected examples to the bot’s training data so that its responses improve.
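One simple way to analyze ratings is to average them per intent and flag the weakest areas. This sketch assumes that each feedback record stores an intent label and a 1 to 5 rating. The record format, the threshold, and the function name are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def low_rated_intents(
    feedback: list[dict], threshold: float = 3.5, min_ratings: int = 2
) -> list[tuple[str, float]]:
    """Average the ratings per intent and return intents below the threshold, worst first."""
    ratings: dict[str, list[int]] = defaultdict(list)
    for item in feedback:
        ratings[item["intent"]].append(item["rating"])
    averages = {
        intent: mean(scores)
        for intent, scores in ratings.items()
        if len(scores) >= min_ratings  # skip intents with too few ratings to judge
    }
    flagged = [(intent, avg) for intent, avg in averages.items() if avg < threshold]
    return sorted(flagged, key=lambda pair: pair[1])


feedback_log = [
    {"intent": "refund", "rating": 2},
    {"intent": "refund", "rating": 3},
    {"intent": "order_status", "rating": 5},
    {"intent": "order_status", "rating": 4},
    {"intent": "greeting", "rating": 1},
]
print(low_rated_intents(feedback_log))  # [('refund', 2.5)]
```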

5. Analyze the Data

Examine all collected data and responses, looking for patterns and areas of deficiency in the chatbot’s responses. By doing this, you can learn how users interact with your bot and what they expect from it, helping to guide updates and improvements.

6. Continuous Training of the Bot

Make sure your chatbot is trained on data that reflects the user interactions it actually handles. Regular training with updated datasets can help the bot keep up with emerging trends and changes in user inputs over time. Each well-curated dataset can help it recognize the right patterns, understand context better, and respond more accurately.
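If you retrain by fine-tuning an OpenAI chat model, the training file uses the JSON Lines format. Each line holds one example with a ‘messages’ list in the same role/content format used with the chat API. This sketch assumes the logged conversations have already been reviewed and corrected. ‘to_finetune_jsonl’ is a hypothetical helper, and it skips conversations that don’t end with an assistant reply, so that every example demonstrates a complete answer.

```python
import json


def to_finetune_jsonl(conversations: list[list[dict]], system_prompt: str) -> str:
    """Serialize reviewed conversations as JSONL, one {"messages": [...]} example per line."""
    lines = []
    for turns in conversations:
        if not turns or turns[-1]["role"] != "assistant":
            continue  # skip conversations without a final assistant reply
        example = {"messages": [{"role": "system", "content": system_prompt}, *turns]}
        lines.append(json.dumps(example, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


reviewed = [
    [
        {"role": "user", "content": "Where is my order?"},
        {"role": "assistant", "content": "I can check that for you. What is your order number?"},
    ],
    [{"role": "user", "content": "This conversation was never answered."}],
]
jsonl = to_finetune_jsonl(reviewed, "You are a helpful customer-support assistant.")
print(jsonl, end="")
```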

7. Implement Upgrades and Repeat

After analyzing the data, make changes, upgrades or improvements to the chatbot as necessary. Then, repeat the whole process. Test, get feedback, analyze, refine, and upgrade your bot to continually improve its performance over time. Remember, refining and training a chatbot is an ongoing process and takes time to get right.

By following these best practices, you will be well on your way to a high-performing chatbot that improves the user experience.

Getting hands-on experience with ChatGPT, learning the mechanics behind its technology, and optimizing your chatbot’s performance are fundamental to working effectively with AI models. Continually experimenting, testing, and improving your code will help you understand the nuances not just of ChatGPT but of transformer models and natural language processing as a whole. Once you are familiar with this technology, you will see that its applications extend well beyond chatbots and AI conversations to practical, transformative uses in many fields. Harness the potential of ChatGPT, build effective chatbots, and explore new technical territory with confidence and expertise.